How computer vision can spot problems long before a customer notices

In Picnic’s fully-automated fulfilment centre in Utrecht thousands of totes move over more than 50 kilometres of conveyor belts every single day. Our in-house control software decides where every tote should go and when. What that software cannot do today is look inside the moving boxes. We only discover the state of the goods when the tote reaches a picking station, a delivery driver or, worst case, a customer.

That blind spot occasionally hurts. A product can bounce out of the tote, a six-pack can squash a packet of tomatoes or a shopper can accidentally drop the wrong flavour of yoghurt into a bag. Right now we rely on statistical safeguards and clever packing algorithms to make those mistakes rare. Imagine though, if the system could see the problem the moment it happened and nudge the tote to a new destination for getting fixed. That is the promise of adding computer-vision “eyes” to our warehouses.

In this article we will explain the opportunity, the building blocks and the trade-offs we found while turning that promise into a production project.

Why Seeing Matters

In our warehouse, we distinguish between different types of totes. For the two most important types in the warehouse, we define the following use cases.

1. Order totes are the totes that eventually end up at a customer’s doorstep. Computer vision can:

- Verify that the shopper placed the correct number of items.

- Flag items that landed in the wrong bag (so that someone else’s chocolate doesn’t end up in your delivery).

- Spot obvious product mismatches, such as adding the wrong flavour of crisps to the tote.

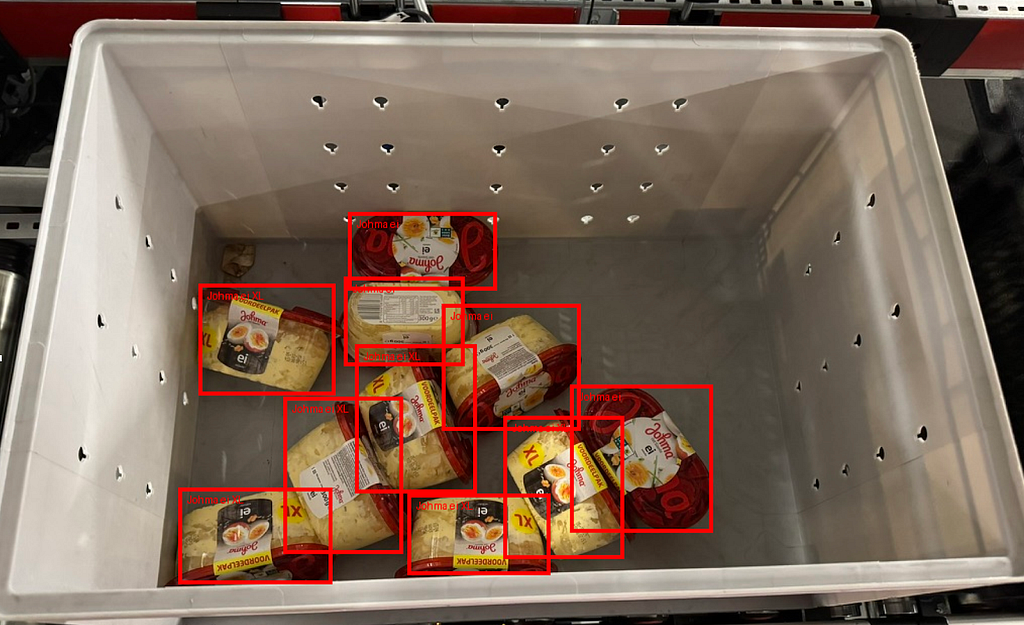

2. Stock totes feed the order totes. They are periodically counted by shoppers to keep the inventory data accurate. Counting interrupts the picking flow and therefore costs time and money. Vision can:

- Count items automatically and update stock.

- Highlight damaged, expired or incorrect items before they contaminate an order tote.

- Help quality-control teams to find totes that contain bad items, rather than sampling at random or relying on shoppers flagging issues.

The bottom-line impact is clear: fewer manual recounts, fewer delivery errors, happier customers!

Every Pixel Counts

Good vision AI starts with good visibility.

Before we can even start thinking about neural networks, we need to get the basics right: capturing the right image, at the right time, with the right metadata. That sounds simple, until you try doing it on a conveyor belt moving at 1.5 meters per second, with reflective packaging, awkward product angles, and totes that don’t always play nice with your lighting setup.

For a model to make accurate predictions, the raw input, the image in this case, needs to be clean, consistent, and traceable. That means each tote must be perfectly visible, with minimal motion blur, and paired with its unique barcode so we can match the visual contents to our inventory records. No blurry corners. No missed scans. No second chances.

Getting this right is non-negotiable. If the training data is noisy or the production feed inconsistent, even the best AI model will be guessing at best. Once this foundation is in place, we can confidently start training models that learn to spot squashed tomatoes, count apples, or flag that rogue yoghurt that snuck into the wrong bag.

Depth or Detail? Choosing the Right Lens

The first hardware decision to make is choosing a camera: do we favour a fast, high-resolution 2D sensor or invest in a depth-aware 3D unit?

A 2D camera is budget-friendly, can be installed straight onto the conveyor frame and has the ability to capture colour with great quality. Perfect for reading barcodes and spotting label mismatches. The trade-off is that it depends on the stable, uniform lighting we already engineered above the belt; pile two items on top of each other and a flat image can struggle.

A 3D camera adds a second channel of information, the distance to each pixel. That extra dimension separates overlapping products and makes annotation almost trivial, because depth draws a clear border around every object. Unfortunately the upgrade costs two to three times more, eats bandwidth and demands painstaking calibration.

For our pilot we stayed pragmatic: uniform lighting and reliable barcode readability pushed us toward the 2D route first, letting us feed sharp frames into whichever model family we test next. If later on we want to push for that last possible bit of accuracy, we already know which lens to reach for.

Thinking Locally, Acting Fast

An important design choice is deciding where the model should run its inference. Model inference can be done in the Cloud or on the Edge.

AWS defines edge computing as “bringing information storage and computing abilities closer to the devices that produce that information and the users who consume it”. Running inference on the edge, right next to the camera, reduces latency and bandwidth. It however also forces us to deploy and maintain more hardware in the warehouse, and development time can be long.

Thanks to devices such as the Nvidia Jetson we can mount a competent GPU directly inside the camera cabinet. That setup makes sense if the heavyweight part of the workload, the forward pass through the neural network, stays local.

A traditional baseline for local computer vision is YOLO, famous for real-time object detection. The latest version at the time of writing, YOLOv10, gives a neat balance between speed and accuracy, while keeping the code easy to tinker with. They are battle-tested and cloud-edge agnostic. The YOLO family however shines when latency is tight, which is not the case here. We are okay with waiting a few seconds before the result comes in, because the tote takes some time to travel to its destination. This gives the AI time to “think” about whether the tote is faulty in some way. If the AI detects something going wrong, action can be taken to adjust the stock inventory data, or sending the tote to a special station where a human can take a look at it. And when there is more time available to get to an answer, other techniques will probably get us a better accuracy.

One of such models is CountGD, a model specialised in counting objects in images. Benchmarks show that it could be a serious model upgrade in comparison to YOLO. It accepts a textual prompt (“apples”) and a visual exemplar, then returns the count. This new, hybrid approach can increase the quality of object counting a lot. The problem with CountGD however is that this model is not capable of doing anything else. It cannot classify damages, meaning we would need to run several models in parallel, where another model would detect defect products and another one would validate the product barcodes it can read.

Can Vision Language Models Spot a Squashed Tomato?

The alternative to running the model inference on the edge is to run a thin client on the edge that just captures images and sends them to the cloud for inference. Vision Language Models, or VLMs, are a specific type of multi-modal LLMs. Examples include OpenAI’s GPT family and Google’s Gemini models.

Until a few years ago, both performance and price were the biggest blockers for using these models at such a scale. They have however become way cheaper: the cost of running a VLM has dropped roughly 10× each year, amounting to a 1000× decline in the past three years according to the blog by Andreessen Horowitz.

At the same time, they have become way better. How to define better? There are of course benchmarks that have improved tremendously, like MMMU scores that are getting close to a 100% in only two years time. For a “true test”, we can of course also check the blog by Simon Willison on how well LLMs can create an SVG drawing a pelican on a bicycle!

So given that VLMs have become good ánd cheap already (and expectations are clear that the innovations won’t stop yet), betting on the VLM trajectory is tempting. It decouples us from on-premise GPUs and lets us scale model size without bringing in new hardware. Also, it is orders of magnitude faster to implement a VLM strategy than building our own proprietary models. It allows for blazingly fast prototyping and plug and play model upgrades over time.

Gemini already shows the shape of things to come: its spatial-reasoning quick-start notebook turns a single API call into object counts, bounding boxes and relationship descriptions. Feed it an image of a tote and Gemini will provide the bounding boxes of all products in it, including a description of faulty articles. All of this by just writing a simple prompt.

Of course VLMs are not perfect yet. One downside is that model inference is still pretty slow. During our own testing, Gemini 2.5 Flash took 3 to 4 seconds to respond. Optimising this should be possible with a shorter prompt, compressing images and/or caching. And with companies like Groq focusing purely on making LLMs faster or Fastino making blazingly fast LLMs for specific tasks only, faster inference also seems like a matter of time.

Models can also still hallucinate, and cost can increase quickly if volumes get high or tasks get more complex. It still requires careful development of prompts, forcing it to always return in the same format and making the VLM avoid common mistakes. Also, fine-tuning a VLM by showing it newly labelled training data is very hard and expensive at the time of writing. For text-based LLMs this is already possible for a while, so another example of expected innovation to be excited for.

Smarter Totes, Smoother Rides

Computer vision lets our automated fulfilment centre in Utrecht ditch blind spots and treat every tote as a data point. Order totes gain real-time checks for counts, bag placement and obvious mismatches, while stock totes get automatic audits that avoid manual stock recounts and detect damages before it spreads. A sharp 2D image plus a barcode is the ground truth our models need, and controlled lighting keeps that image crisp. We start with cost-friendly 2D cameras, where the images can be used in all possible AI solutions.

We then choose how we will power the AI: Jetson-powered edge boxes for ultra-low latency or a cloud route that rides the plummeting cost of multi-modal LLMs. YOLO could be our fast baseline, CountGD nails pure counting and cloud giants like Google’s Gemini can do it all through their mysterious black box.

Together these pieces form a pipeline that flags problems early, trims waste and delivers happier customers without slowing a single conveyor belt!

Stay tuned for part II where we will go in-depth on the implementation of the AI models, comparing current state of the art for counting, and how VLMs can be leveraged to solve this problem. We will challenge CountGD and the Google Gemini model lineup against the same dataset to see which counts products in a tote the best.

Adding Eyes to Picnic’s Automated Warehouses was originally published in Picnic Engineering on Medium, where people are continuing the conversation by highlighting and responding to this story.