Picnic 10 Years: 2021 — Expanding into France, and beyond

In our seventh year, Picnic launched in France — a milestone not just in expansion, but in rethinking how we scale supplier integrations globally.

By 2021, seven years after launching in the Netherlands and two after entering Germany, the business case had proved strong enough to continue unlocking Picnic’s untapped potential in France. It was time to say Bonjour!

We’ve done this not once, but twice! Copy, paste, rinse, and repeat… or not?

Opening a new market requires an articulate and compelling plan, mindful of many aspects of our organization such as recruitment, equipment, legislation, and technical system support. As engineers, we’re fortunate to work alongside great colleagues at Picnic, allowing us to focus on the last of these: technical system support. Many teams from different domains need to be involved. In the year 4 edition of this blog series, we explained the technical challenges we faced launching in Germany. You can also find details there about our internal Product Information Management system and how it benefited from using object-oriented design to accommodate a new market, which we’ll revisit later.

Picnic sits at the end of the product supply chain, delivering groceries directly to customers’ doors. As part of this chain, it’s crucial for our commercial business to partner with hundreds of product suppliers. These suppliers typically operate within specific countries.

Picnic negotiates agreements with selected suppliers, who then deliver products to our warehouses. As is common practice in the industry, supplier and buyer exchange master data, product orders, invoices, stock information, and price updates via a technical interface. Without it, processes fall back to good old emails and Excel sheets, which are prone to human error and security flaws.

So, to launch in a new market, we first need to:

- Find suppliers in the market

- Collaborate with them to specify integration requirements

- Track progress through regular check-ins with suppliers

- Build and connect to the supplier’s API

Our team already had experience with this from our prior launch in Germany. From an infrastructure point of view, our systems are single-tenant, running separately for each market. Because France was our third market, we could copy the system deployments with configuration changes, allowing the team to focus on supplier API and master data concerns. A few tech teams got involved in this project, ticking off milestones one by one, leading to our successful launch in France with a partnered supplier!

Bonjour!

This is not where the blog post ends, but where it takes off!

Amidst all the croissant and baguette emojis flying around in our celebratory Slack posts 🥖🥐, one couldn’t help but wonder: what’s next? We were live in three countries. What happens when we’re in 100? We were working with a handful of suppliers via direct API connections. What would happen when we have 1000?

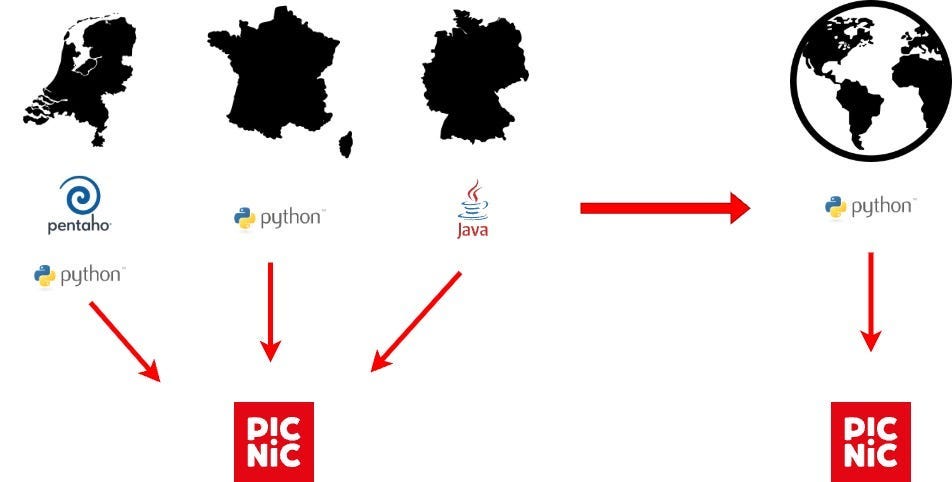

Would our hacky scripts and cron jobs written in different programming languages be sustainable then? Could we maintain consistent domain terminology across markets? Could we afford slow issue resolution? What was the projection of engineering hours needed — not only to launch a new market but also to maintain 100 existing ones?

Copy, paste, rinse, and repeat could only take us so far. Scaling further called for a deliberate strategy: investing in the development of a brand-new, future-proof software architecture. As with most software, our solutions needed to evolve alongside business success.

Not long after the France launch, Picnic doubled down on growing operations in Germany. At that time, our integration only worked in a specific geographical area, the Rhein-Ruhr region. Expanding to the rest of Germany meant scaling our integrations. So, those earlier questions about sustainability and scalability had become less prophetic and more immediately relevant.

Thankfully, decision-making informed by current business priorities, future goals, and technical excellence allowed us to find brilliant answers. This enabled us to mobilize towards innovative solutions.

Master Data Integration Challenges

We recognized the value of modelling our internal product master data early on at Picnic. The data resides within our MDM system, aka PIM (Product Information Management). Using an object-oriented design, over time we developed a consistent model for all master data components required by our operations and software.

The data that fuels our business can be summarized in three parts:

- Information about the consumer product itself. Users want to see ingredients, allergens, and country of origin for products they inspect in the app.

- Information about the product supplier. The commercial team needs to know availability and pricing.

- Information about supply chain logistics. This covers packaging forms — crates, pallets — and how many consumer products Picnic receives when ordering these forms.

This robust model allowed us to tap into a new supplier’s master data model and convert it into our internal format. This is the principle behind our master data integrations. Their role in our software landscape is to connect to supplier APIs, transform data into the canonical model and ingest the information into the internal PIM API in a country-agnostic format.

However, certain problems in our master data solutions hindered the addition of new integrations. Some issues were known at the time; others discovered later:

- Scattered implementations: Legacy and shortcut solutions were written in various programming languages, making it difficult to operate and consolidate them.

- No central ownership: Different teams owned separate integrations for different countries and suppliers.

- Long lead time for every new supplier integration: Every new supplier required a carefully tailored approach to integrate the master data into Picnic systems.

- Shadow IT: As this was before our internal edge systems platform came into existence, some solutions didn’t use platform tooling and didn’t meet the quality standards raised back then. No observability, no alerting, no clear process.

- Inability to meet certain supplier requirements: Advanced features like pub/sub interfaces, caching older data, early reaction to faulty data, etc., couldn’t be supported by quick scripts.

- Inability to meet internal requirements: Our data warehouse lacked raw supplier master data analytics, blocking data-driven decision-making.

We were confident we weren’t suffering from “not-invented-here” syndrome. It was an informed decision to tailor our solution to our own and our suppliers’ needs, giving us full control over the pipeline.

This is where I personally come into the picture. Thanks to amazing domain leadership that took responsibility for large-scale plans, elevated personal and business ambitions, extended help whenever needed, brought clarity, and acted with optimism and humility, I was able to step into technical leadership at Picnic. Transitioning from software engineer, I was excited and determined to make this case a success.

We mobilized a team of Python engineers, blending internal developers from various teams with new hires. Our task was clear: we were the suppliers’ master data gateway into PIM. Our goal was to deliver secure, robust, scalable, and country-agnostic master data integration software, featuring traceable and observable data pipelines.

By adopting a shift-left strategy — investing in master data integrations early in our software landscape — we could frontload critical checks and successful interpretation of supplier master data, reducing friction downstream in PIM.

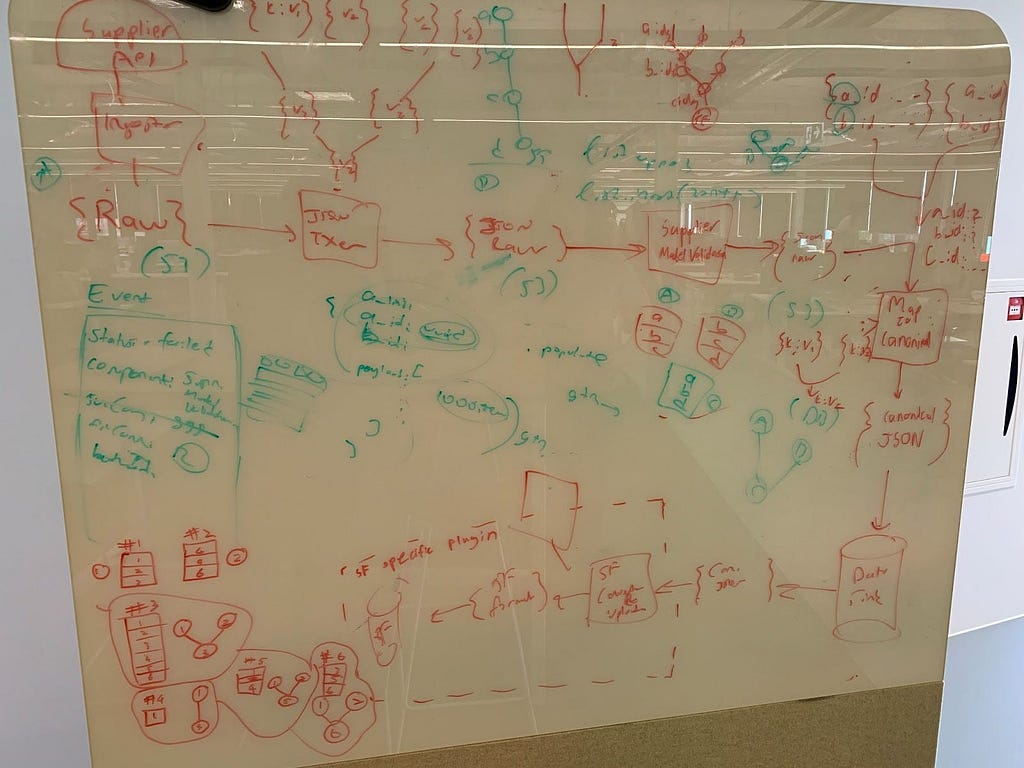

Revamping the entire master data landscape required a strong R&D culture and led to a greenfield project. Office brainstorm meetings in front of a whiteboard became our bread and butter. We ran proofs of concept to decide the best path forward. Pros and cons of alternative routes were clearly laid out, aiding objective decision-making within the team. We knew the timeline demanded kinetic action, so we started developing early. In an iterative fashion, we paved the way one brick at a time — sometimes removing a brick only to replace it with a different color, which is what working agile entails.

Re-architecting Master Data Integrations

At the time, functional requirements were fairly well understood and can be summarized as follows:

Data transport protocols:

- Suppliers use varying APIs, e.g. HTTP, pub/sub, SFTP.

- Data can be pushed from suppliers on updates or pulled regularly by us.

- Complete product data may come in multiple payloads, not just one.

- Certain product information requires prior context.

- Older updates might arrive after newer ones.

Data structure:

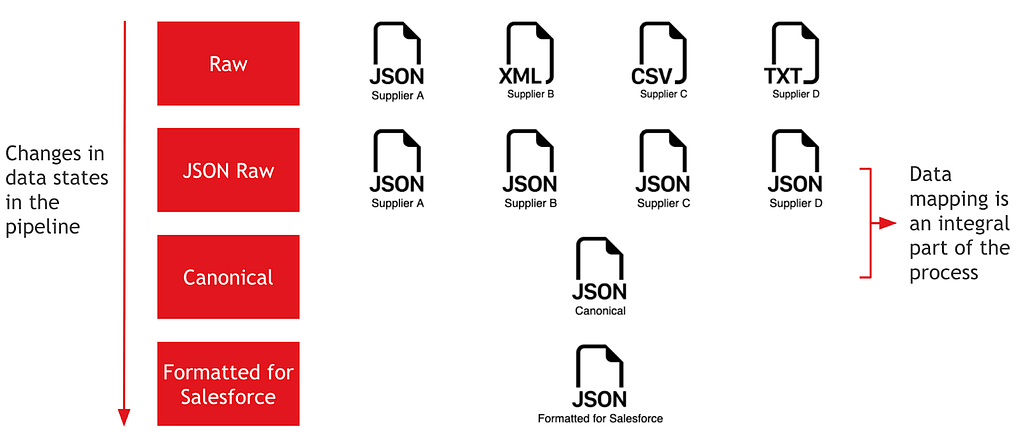

- Data arrives in different raw formats, e.g. JSON, XML, CSV.

- Supplier’s data schema is out of our control. Detect changes early.

- Updates may be atomic and sparse, not containing all information.
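To make the "detect changes early" requirement concrete, here is a minimal sketch of a schema drift check. The field names and the `EXPECTED_FIELDS` mapping are invented for illustration and are not taken from any real supplier feed. Missing fields are deliberately not reported, since sparse updates are legitimate.

```python
from typing import Any

# Hypothetical expected schema for one supplier feed: field name -> Python type.
EXPECTED_FIELDS: dict[str, type] = {
    "article_id": str,
    "description": str,
    "price": float,
}


def detect_schema_drift(payload: dict[str, Any]) -> list[str]:
    """Return human-readable findings; an empty list means no drift was seen."""
    findings = []
    # Only fields that are present are checked, because updates may be sparse.
    for name, value in payload.items():
        if name not in EXPECTED_FIELDS:
            findings.append(f"unknown field: {name}")
        elif value is not None and not isinstance(value, EXPECTED_FIELDS[name]):
            expected = EXPECTED_FIELDS[name].__name__
            findings.append(
                f"type change: {name} expected {expected}, got {type(value).__name__}"
            )
    return findings
```

A check like this could run right after preprocessing, so that an unexpected field or type raises an alert before the data reaches canonicalization.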

Data integrity:

- Sensitive commercial information must be encrypted.

- Support for non-ASCII characters is essential. After having to deal with the German ß or ü, we found even more of them in our French data 😅

Previous master data solutions provided some guidance on how to design the system. This helped us optimize the architecture early, but not prematurely. We could generalize the data model early, but not hastily. Quick proofs of concept boosted confidence, lifted spirits, and helped us avoid the sunk-cost fallacy by guiding informed investment decisions.

A key breakthrough was cementing country-agnostic data modelling at the architecture’s core. Essentially, data exists either in the supplier’s raw model or in our own country-agnostic canonical model. Since supplier representations aren’t always in an operable format, we first convert them into JSON. The canonical model is then reformatted once more before loading into Salesforce, which powers our Product Information Management system. Such a supplier-agnostic canonical model enables consistency across markets, simplifies the core master data system, and protects Picnic data from supplier modelling choices.
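As an illustration of the raw-to-canonical idea, the sketch below maps one invented supplier payload onto an invented canonical record. The `CanonicalProduct` fields, the supplier keys (`code_article`, `libelle`, `prix_achat`, `colisage`), and the hard-coded currency are all assumptions made for this example. The real canonical model is not described in this post.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Any, Optional


@dataclass(frozen=True)
class CanonicalProduct:
    """Hypothetical country-agnostic record; field names are illustrative."""
    supplier_id: str
    supplier_article_id: str
    name: str
    purchase_price: Optional[Decimal]
    currency: str
    units_per_crate: Optional[int]


def canonicalize_supplier_x(raw: dict[str, Any]) -> CanonicalProduct:
    """Map one invented supplier's JSON payload onto the canonical model."""
    price = raw.get("prix_achat")
    units = raw.get("colisage")
    return CanonicalProduct(
        supplier_id="supplier-x",
        supplier_article_id=str(raw["code_article"]),
        name=raw["libelle"].strip(),
        # Some feeds use decimal commas; normalise before parsing.
        purchase_price=Decimal(str(price).replace(",", ".")) if price is not None else None,
        currency="EUR",  # assumed for the example
        units_per_crate=int(units) if units is not None else None,
    )
```

Everything downstream of such a function only ever sees `CanonicalProduct`, which is what keeps supplier modelling choices out of the core system.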

This breakthrough helped fix clear domain boundaries, each of which eventually turned into a loosely coupled microservice:

- Raw master data consuming: Connect to supplier API.

- Raw master data preprocessing: Convert to JSON and optionally apply further preprocessing like caching.

- Master data canonicalization: Transform data into the country-agnostic canonical model.

- Master data materialization: Update master data records with atomic sparse updates, ensuring idempotency and that only newer updates override older ones.

- Master data loader: Push master data to Salesforce.

- Master data analytics: Propagate raw and canonical master data to the data warehouse, empowering analysts in decision-making.
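The materialization step is the least obvious of these, so here is a minimal sketch of how sparse updates could be merged idempotently. It assumes every update carries a monotonically increasing `version` (for example a supplier timestamp) and tracks versions per field. Both are assumptions for illustration, not a description of the actual service.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class MaterializedRecord:
    """Current state of one product plus the version that last set each field."""
    values: dict[str, Any] = field(default_factory=dict)
    versions: dict[str, int] = field(default_factory=dict)


def apply_update(record: MaterializedRecord, fields: dict[str, Any], version: int) -> bool:
    """Merge a sparse update; return True if it was applied to at least one field."""
    applied = False
    for name, value in fields.items():
        # Strictly-newer check: replays of the same update and late arrivals
        # of older updates are both ignored, which makes the merge idempotent.
        if version > record.versions.get(name, -1):
            record.values[name] = value
            record.versions[name] = version
            applied = True
    return applied
```

With per-field versions, a late price update at version 1 cannot overwrite a price set at version 2, while fields the update does not mention are left untouched.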

The system uses an event-driven design where master data flows across microservices. Each microservice is based on a shared framework, implemented in several Python packages. The services communicate over AMQP, with RabbitMQ as the message broker, to consume, process, and produce messages containing master data.

This divide-and-conquer approach made the task more manageable and allowed us to deliver an MVP early on. Each microservice had its own backlog with prioritized tasks. The MVP also gave us the chance to test resilience in unexpected scenarios:

- Are failed messages alerted on?

- What happens to messages on failure?

- Can messages be replayed from the archive back into the message broker queue?
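The three questions above can be explored with a toy model. The sketch below is an in-memory stand-in and does not use RabbitMQ or any AMQP client. The class name, the message envelope (`id`, `body`), and the `alerts` list are all hypothetical. It only shows the behaviour one would want to verify: failures raise an alert, failed messages are kept, and archived messages can be replayed.

```python
from collections import deque
from typing import Callable

Message = dict  # hypothetical envelope: {"id": ..., "body": ...}


class InMemoryPipeline:
    """Toy stand-in for a broker queue with a failure archive; not RabbitMQ."""

    def __init__(self, handler: Callable[[Message], None]) -> None:
        self.handler = handler
        self.queue: deque = deque()
        self.archive: list = []  # failed messages are parked here
        self.alerts: list = []   # stands in for an alerting hook

    def publish(self, message: Message) -> None:
        self.queue.append(message)

    def drain(self) -> int:
        """Process every queued message; return how many succeeded."""
        succeeded = 0
        while self.queue:
            message = self.queue.popleft()
            try:
                self.handler(message)
                succeeded += 1
            except Exception as exc:
                # Keep the message and record an alert instead of dropping it.
                self.archive.append(message)
                self.alerts.append(f"message {message['id']} failed: {exc}")
        return succeeded

    def replay_archive(self) -> int:
        """Move archived messages back onto the queue; return the count."""
        replayed = len(self.archive)
        self.queue.extend(self.archive)
        self.archive.clear()
        return replayed
```

In a real deployment the archive and replay would be handled by broker features or separate tooling. The point of the sketch is only to show the kind of behaviour an MVP lets you exercise.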

Months passed as we developed the system from scratch. Meanwhile, we connected our development/testing environments to suppliers’ testing environments in Germany (with a pub/sub API) and began processing test master data for additional German regions, such as North and South-West Germany, alongside Rhein-Ruhr.

The Moment Theory Became Practice

While the greenfield project progressed as planned and timelines to launch Picnic in new German territories seemed feasible, a sudden new business priority emerged: integrate a new supplier in France within 6 weeks. The new system wasn’t fully built yet. We had to decide whether to build this supplier integration in the legacy way or in the new system.

Truthfully, a “big project” with such a short timeline could have felt daunting. However, the team’s sentiment was the opposite. This was our moment to reap the benefits of the new architecture and make a business impact, starting in France rather than Germany as planned! We planned sprints ahead, shifted focus to the new French supplier, and said Bonjour once again 🥖🥐!

Thanks to most of the system operating on canonical data, we only needed to:

- Create a new Master Data Consumer service to collect data from the new supplier’s SFTP server.

- Convert the raw CSV (including French non-ASCII characters) to JSON.

- Transform only the minimally required data to canonical form.

This meant most functionalities came out of the box, dramatically reducing lead time for the new supplier integration.
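The CSV-to-JSON step from the list above might look roughly like the following sketch. The delimiter, the encoding, and the column names in the usage example are assumptions, since the post does not specify the supplier's file format. Fetching the file from SFTP is left out.

```python
import csv
import io
import json


def csv_bytes_to_json_lines(raw: bytes, encoding: str = "utf-8", delimiter: str = ";") -> list[str]:
    """Decode a supplier CSV export and return one JSON document per row."""
    # Decode explicitly: a wrong codec would silently corrupt é, ç, œ and friends.
    text = raw.decode(encoding)
    reader = csv.DictReader(io.StringIO(text, newline=""), delimiter=delimiter)
    # ensure_ascii=False keeps accented characters readable instead of \uXXXX escapes.
    return [json.dumps(row, ensure_ascii=False) for row in reader]
```

For example, `csv_bytes_to_json_lines("code;libelle\n1;Crème brûlée\n".encode("utf-8"))` yields a single JSON document whose `libelle` value still reads `Crème brûlée`.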

Together with other teams redesigning their supplier integration solutions and tackling one of the biggest bottlenecks in master data ingestion, we succeeded — delivering the French supplier integration two days before the deadline. Adding master data from a new supplier into PIM and extending purchase ordering and invoicing to cover that new supplier is a complex task. Yet, users could enjoy a quality assortment, high availability, and completeness in their orders without major interruption.

The following year, we wrapped up the integration of a new German supplier and pushed the big red button to launch the new system in Germany as well. We consolidated all active legacy integrations into the new system and eventually retired all legacy solutions. Today, all master data ingestion at Picnic flows through a single system.

Conclusion

The year 2021 marked Picnic’s launch in France and the birth of a new idea that grew into a dedicated system and team overseeing master data integrations.

In our CTO’s blog post kicking off this series, he mentions embracing the “slow down to speed up” principle. This project is a perfect example where adhering to that principle paid off. Investing in a greenfield project gave us full control of supplier integrations by centralizing and consolidating them in a single framework — significantly reducing lead times for every new supplier integration.

After leading this team and managing the system for 2.5 years, I transitioned into a new leadership role focused on warehouse systems. My new team now benefits from the high-quality data provided by the master data integration system! This work wouldn’t have been possible without the team’s relentless curiosity, resilience under pressure, and cross-domain collaboration.

Although this blog post concludes here, it’s comforting to know that Picnic’s master data integration platform is processing important updates even as you read this!

TL;DR

- 🇫🇷 Launched in France by reusing and adapting Germany’s playbook

- 🔄 Rebuilt supplier integration from scratch for scale

- 🧱 Microservice architecture powers all supplier master data ingestion

- 📊 Enabled real-time, reliable, and country-agnostic data pipelines

Originally published in Picnic Engineering on Medium.